OpenAI, the company behind ChatGPT, says its new GPT-4 model exhibits “human-level performance” on several professional and academic benchmarks. GPT-4 is a large multimodal model.

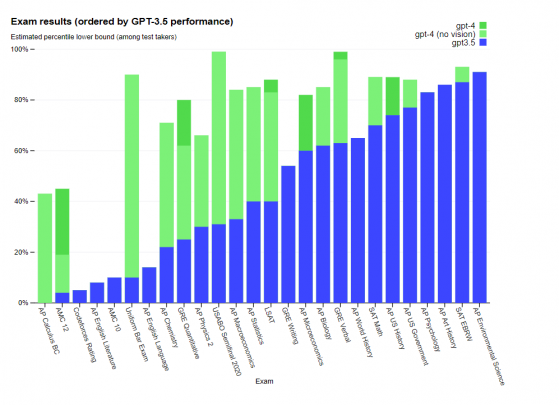

GPT-4 outperformed its predecessor, GPT-3.5, by a significant margin. On a simulated bar exam, GPT-4 scored in the top 10% of test takers, while GPT-3.5 scored in the bottom 10%.

GPT-4 is currently available to ChatGPT Plus subscribers. OpenAI is also rolling out access through its commercial API, with developers admitted from a waitlist.

OpenAI announced the model on Twitter on March 14, 2023: “Announcing GPT-4, a large multimodal model, with our best-ever results on capabilities and alignment: https://t.co/TwLFssyALF”

Aced in simulated exams

Addressing the capabilities of the new model, OpenAI said: “In a casual conversation, the distinction between GPT-3.5 and GPT-4 can be subtle.

“The difference comes out when the complexity of the task reaches a sufficient threshold: GPT-4 is more reliable, creative and able to handle much more nuanced instructions than GPT-3.5.

“To understand the difference between the two models, we tested on a variety of benchmarks, including simulating exams that were originally designed for humans.

“We proceeded by using the most recent publicly-available tests (in the case of the Olympiads and AP free response questions) or by purchasing 2022–2023 editions of practice exams.

“We did no specific training for these exams.

“A minority of the problems in the exams were seen by the model during training, but we believe the results to be representative.”

Exam results.

What’s more

OpenAI also evaluated GPT-4 on traditional benchmarks designed for machine learning models.

GPT-4 also significantly outperformed existing large language models, as well as most state-of-the-art (SOTA) models, some of which rely on benchmark-specific crafting or additional training protocols.

In addition to text, GPT-4 can accept visual inputs, although its output is always text.

Specifically, it generates text outputs (natural language, code, etc.) from inputs that interleave text and images.

Read more on Proactive Investors AU