A report by the Network Contagion Research Institute (NCRI) has revealed a troubling trend within TikTok’s content algorithm, which simultaneously boosts content promoting the Chinese Communist Party (CCP) while suppressing posts critical of the regime.

The NCRI combined an analysis of TikTok’s moderation practices, the nature and prevalence of CCP-related content, and users’ browsing data, survey responses, and cross-platform comparisons to come to its conclusions, which “present compelling and strong circumstantial evidence of TikTok’s covert content manipulation.”

TikTok in DOJ’s crosshairs

In April this year US President Biden signed a bill that would enable the banning of TikTok, a short-form video social media platform popular among younger users.

The platform is owned by Chinese company ByteDance, which was given nine months to divest itself of the app on the grounds that it represents a security risk and a powerful tool for propaganda.

TikTok sued the government in May, stating Congress has “taken the unprecedented step of expressly singling out and banning TikTok” and describing the ban as unconstitutional.

While the ban itself is still pending, a slew of organisations, including universities and government services, have already banned the social media app from all non-personal devices at the state, local, and federal levels.

In light of this new report from the NCRI, the caution may have been prudent.

Shining spotlight on shady algorithms

NCRI’s report highlights the insidious ways in which algorithms can distract attention or influence sentiment.

The platform systematically shouts down, drowns out or simply obfuscates sensitive issues and topics that could be used to criticise the CCP, using a stable of travel influencers, frontier lifestyle accounts and other CCP-linked content creators to influence minds and change opinion.

Using the Tiananmen Square incident as an example, the NCRI’s research assessed the percentage of content that was either pro- or anti-CCP based on the 'Tiananmen' key search term.

On TikTok, anti-CCP content under the 'Tiananmen' search term constituted 19.6% of content, compared with 64.7% on YouTube and 56.3% on Instagram.

In contrast, 26.6% of 'Tiananmen' content was pro-CCP on TikTok, compared to 7.7% on YouTube and 16.3% on Instagram.

Interestingly, 45.6% of content on TikTok under the 'Tiananmen' search term was completely unrelated to the event in 1989, compared to just 3.3% on YouTube and 8% on Instagram.

“This reflects the 'seduce and subjugate' strategy… where content showing other Chinese cities and natural attractions was used to subsume mentions of Tiananmen,” the report explains.

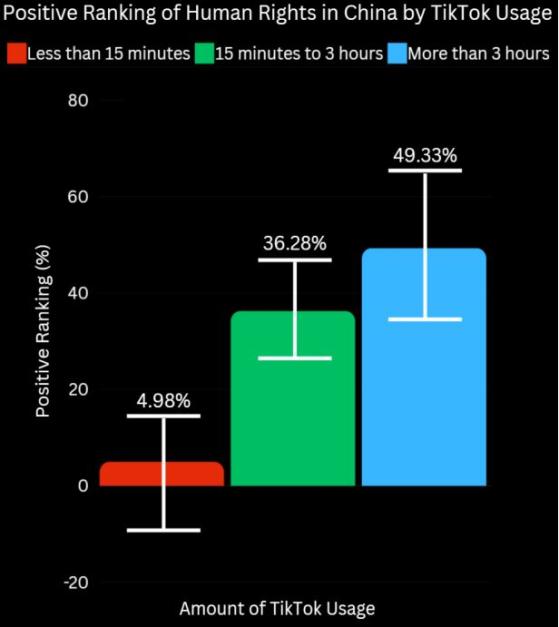
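For readers who want to check the cross-platform comparison, the figures quoted above can be tabulated in a few lines of Python. This is only an illustrative sketch, not the NCRI’s method: the names `shares` and `summarise` are made up for the example, and the "other" share is simply whatever is left after the three quoted categories, which the article does not label.

```python
# Shares (percent) of 'Tiananmen' search results per platform, as quoted in the article.
shares = {
    "TikTok": {"anti": 19.6, "pro": 26.6, "unrelated": 45.6},
    "YouTube": {"anti": 64.7, "pro": 7.7, "unrelated": 3.3},
    "Instagram": {"anti": 56.3, "pro": 16.3, "unrelated": 8.0},
}


def summarise(platform_shares):
    """Return (pro-to-anti ratio, share left over after the three quoted categories).

    The leftover share is an assumption of this sketch: the article does not
    say how the remaining content was classified.
    """
    ratio = platform_shares["pro"] / platform_shares["anti"]
    remainder = 100.0 - sum(platform_shares.values())
    return round(ratio, 2), round(remainder, 1)


summary = {name: summarise(s) for name, s in shares.items()}

for name, (ratio, remainder) in summary.items():
    print(f"{name}: pro/anti ratio {ratio}, other content {remainder}%")
```

On these numbers, TikTok is the only one of the three platforms where pro-CCP results outnumber anti-CCP results for the search term.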

Concerningly, user sentiments about China’s human rights record and travel destination desirability are directly linked with how many hours they spend on the platform.

When asked whether China has a positive human rights ranking, only 4.98% of users who use TikTok for less than 15 minutes each day responded in the affirmative.

The number skyrockets in direct relation to time spent on the platform – a whopping 38.28% of respondents who use TikTok for between 15 minutes and 3 hours each day believe China has a positive human rights ranking, rising to 49.33% for the 3 hours+ group.

Similar changes were reflected in attitudes around China as a desirable travel destination, which rose from 21.2% in the low-use group to 41.2% in the 3-hour+ group.

What can be done about it?

The NCRI’s report paints a damning picture of both TikTok’s algorithmic propaganda activities and their effectiveness in influencing positive opinion of China as both a country and a government.

To combat the problem, the NCRI believes a Civic Trust, funded by both platforms and the public, will be needed to develop a systematic algorithm pressure-testing system that sets standards and ensures transparency.

“This system would help identify when platforms are manipulating user perceptions, forcing individuals to adopt ideas they did not choose, often without their awareness,” the report reads.

“Such a framework is essential to protect the integrity of free speech and the sanctity of free will; in doing so, it will maintain the democratic values within which these platforms operate, at least in free societies.

“Furthermore, this Civic Trust must also develop fair and common consequences for platforms found to be undermining free expression.

“If social media algorithms are found to be subverting the very democracies that provide them the freedom to operate, they are both unjust and dangerous.

“There must be accountability and corrective measures to ensure that platforms are not exploited by state actors to erode democratic institutions and values.”
